Last week, I posted five literacy education pet peeves. I whined about the lack of balance in balanced literacy; calls to end the reading wars that fail to address their root cause; the use of research to cover one’s tracks rather than to support sound decisions; the use of drive-by conferencing in place of deep discussions of text; and instructional schedules tuned to teachers’ comfort levels rather than kids’ learning needs.

As promised, here are five more.

Pet Peeve #6: Research claims based on the wrong kinds of research.

Recently, a claim of mine was challenged on Twitter. Someone pointed out that there was research supporting an approach to literacy teaching that I had deprecated. He essentially wrote, “You’re wrong. Research shows that this approach works.”

The right and wrong of this exchange isn’t important here (though I was right). But it reveals a basic communications problem inherent in literacy discussions.

What does it mean to say that an instructional approach “works” in the teaching of reading?

The problem is that there are two different meanings.

“It works” can mean that kids (or some kids) can or will learn to read from some teaching approach. If that approach is used, many children are likely to become readers.

That’s the meaning that many practitioners think of.

Another possibility, however, is that the approach works better than another. That’s what researchers usually mean.

For instance, when we do studies evaluating the effectiveness of an instructional approach, we must show that it outperforms something else. These days studies compare the approach in question with what are referred to as “business as usual” approaches (BUA). If we can’t demonstrate that students do better than with BUA classroom practices, then the practice doesn’t work. That doesn’t mean that no one learns anything from it, just that they do no better than they would without it.

In my Twitter argument, my antagonist referred to some observational studies which made no comparisons at all. The studies provided detailed descriptions of how reading was taught in some high achieving schools. The conclusion was that these were effective practices since the kids were learning so well.

The problem with that conclusion is that it ignores all other sources of learning.

Control groups and comparison groups are what allow us to separate out influences like maturation and out-of-school experiences.

I checked out one of those observational studies and found that the family incomes in those schools were 42% higher than average, and 150% more of the parents were highly educated professionals (e.g., doctors, lawyers, professors) than would be typical. Do you think there is any possibility that the parents may have had something to do with their children’s remarkable success?

Observational studies have a purpose, but it is not to determine whether a teaching approach provides learning advantages.
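To make that concrete, here is a toy sketch in Python. Every number in it is invented for illustration (none comes from the studies discussed here), and the names `Group`, `observed_gain`, and `estimated_effect` are hypothetical. It also assumes, unrealistically, that learning gains simply add up from three separate sources.

```python
# Hypothetical illustration only: every number below is made up.
from dataclasses import dataclass


@dataclass
class Group:
    """A group of students and the (hypothetical) sources of their reading gains."""
    name: str
    maturation: float       # growth that comes with age alone
    out_of_school: float    # growth from home and community experiences
    approach_effect: float  # growth attributable to the teaching approach itself

    def observed_gain(self) -> float:
        # An observer sees only the total, never the separate parts.
        return self.maturation + self.out_of_school + self.approach_effect


def estimated_effect(treated: Group, comparison: Group) -> float:
    """Difference in observed gains between two otherwise similar groups."""
    return treated.observed_gain() - comparison.observed_gain()


# An affluent school using approach X, described with no comparison group.
observed_school = Group("high-achieving school", 10.0, 15.0, 0.0)

# Two comparable groups that differ only in the approach used.
approach_x = Group("approach X", 10.0, 5.0, 0.0)
business_as_usual = Group("business as usual", 10.0, 5.0, 0.0)

print(observed_school.observed_gain())                  # 25.0: big gain, none of it from the approach
print(estimated_effect(approach_x, business_as_usual))  # 0.0: the comparison shows no advantage
```

In this made-up case the observed school looks terrific, yet the approach contributes nothing. Only the comparison between similar groups isolates what the approach itself adds.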

Teachers may make the same mistake when evaluating their own efforts. They may conclude that an approach “works” solely based on personal experience. They can see that their students are learning, but their observations cannot reveal why. Without a comparison group we can’t know if kids would have done even better with some other approach.

Please don’t argue for the superiority or relative value of any kind of instruction without appropriate comparative data.

Pet Peeve #7: Teaching reading comprehension by asking certain kinds of questions.

Here is another issue that I get a lot of mail about. Principals (and sometimes teachers) are often seeking either testing or instructional materials that will allow them to target specific reading comprehension standards or question types from their state’s reading assessment.

Those requests seem to make sense, right?

They want to know which comprehension skills their kids haven’t yet mastered, and they presume that asking questions aligned with those skills should do the job. Likewise, having kids practice answering the kinds of questions they’ll face on the state tests should improve reading comprehension performance. Again, it looks smart.

My mama told me that just because something seems right doesn’t make it right.

She was right in this case. There is no evidence that these so-called comprehension skills even exist. There is, in fact, considerable evidence that they don’t (Shanahan, 2014; Shanahan, 2015).

Study after study (and the development of test after test) for more than 80 years has shown that we cannot even distinguish these question types one from another. Likewise, there is no evidence that we can successfully teach kids to answer the types of questions used on tests.

If you really want your kids to excel in reading, get them challenging texts. Then engage them in discussions of those texts. Get them to write in response to the texts. Reread the texts and talk about them again. Come back to them later to compare with other texts or have them synthesize the info from multiple texts for presentations or projects.

Ask them questions that are relevant to the understanding of those texts. Don’t worry about the question types. Worry about whether they are arriving at deep interpretations of the texts and whether they can use the information. Reading comprehension is about making sense of texts, not about answering certain types of questions.

Pet Peeve #8: Teaching reading with books that are too easy.

One problem with this peeve is that what I’m complaining about has a bit of truth behind it.

The original idea of teaching with “instructional level” texts was reasonable enough. If kids find reading too difficult, they won’t engage in it, and if they find it too easy, they won’t gain much from engaging in it. There are research studies on teaching (not necessarily the teaching of reading) that say that if there is too much difficulty, students withdraw rather than learn (difficulty and learning). Protecting against that problem makes sense.

How to ensure that learning is neither too onerous nor inconsequential? Answering that question is where things went awry.

We got too formulaic. We set text placements mechanically, with no real justification. For years, teachers have been told to place kids in texts they could read with 95-98% accuracy and 75-89% reading comprehension. I’ve long criticized those levels as being too easy (Shanahan, 2019; Shanahan, 2020). They put kids in books that they can already read reasonably well.

There are other things we can do to ensure success without putting kids in such easy books.

How about placing kids in challenging books and then providing adequate scaffolding and support so that they are not overwhelmed?

Recently, proponents of some of these instructional level schemes have started telling their adherents that the proper book level for kids is one in which they can read 90% of the words correctly (instead of the previous 95%). That should provide a shift to harder books, but an arbitrary one at best (and one in no better alignment with research findings). Even more important, there are no studies showing that this kind of book matching is beneficial. Most important though, this arbitrary change provides no direction as to how to provide students with appropriate and adequate supports for reading these harder books successfully.

Too many kids are being taught reading today with books they can already read reasonably well. As long as that continues, it will be difficult if not impossible to raise national reading levels.

Pet Peeve #9: Efforts to control the difficulty of children’s independent reading.

Just as the instructional level idea has limited kids’ opportunity to learn from reading instruction, there are efforts afoot to limit the challenges of children’s independent reading.

Personal reading should be personal.

Limiting students’ reading choices to texts at Level H, and so on, is boneheaded. There is no research supporting these prohibitions. I often hear from parents who are upset that their children aren’t allowed to read books that interest them because the books are supposedly too hard.

Some commercial programs direct teachers to curb kids’ ambitions in this regard, and others do so through the use of systems of testing, point assignments, and rewards.

Rather than limiting kids to books they can read easily, it would be better to study the research on motivation which suggests that curiosity and challenge can be real motivators.

Pet Peeve #10: Those who promote the “science of reading” but then sneak in approaches not supported by research.

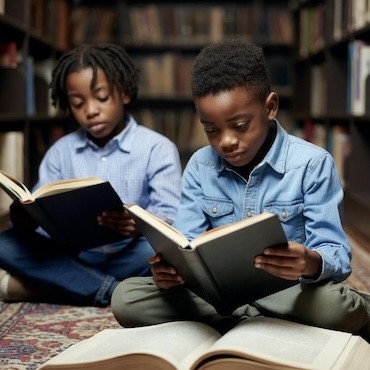

I’m a big science of reading instruction guy. I’ve been chagrined as American reading scores have languished while students from other nations have progressed. It is especially upsetting given the reading proficiency gaps that divide us racially, linguistically, and economically.

The best way to raise achievement is to adhere to the research; to provide students with the approaches found to be most effective in terms of promoting learning.

It has been great to hear parents, school boards, and state legislatures calling for reliance on the science of reading.

However, not everyone who promotes that idea is especially serious about it. For example, they’ll insist that phonics instruction is supported by science (indeed, it is), but then sneak in stuff like sound walls, decodable text, and extra heavy doses of phonemic awareness instruction with no science in sight.

Nothing wrong with arguing for any of those practices, but there is a real “truth in advertising” problem when those are advanced under the science of reading flag.

Such promotions should carry disclaimers that separate out the science from the ideas that the promoters happen to like.

Some experts who play this game tell me that they know there isn’t evidence supporting their contentions. But they argue that it is okay to promote them anyway since “it is logical” that their nostrums work. Maybe they do and maybe they don’t, but they still should separate out their claims, rather than misleading parents, practitioners, and policymakers.
